WARNING

As of October 2020, there’s now an official feature in Cloud Run to configure static IPs using VPC and NAT. I have written an official guide to set up static outbound IPs. Please do not apply the workaround in this article anymore.

If you are migrating to serverless with Google Cloud Run from your on-premises datacenters, or Google Kubernetes Engine (GKE) clusters, you might notice that Cloud Run has a slightly different networking stack than GCE/GKE.

When accessing endpoints that require “IP whitelisting” (such as Cloud Memorystore, or something on your corporate network) from Cloud Run, you can’t easily have static IPs for your Cloud Run applications. This is because you can’t configure Cloud NAT or Serverless VPC Access on Cloud Run yet.

Until we support these on Cloud Run, I want to share a workaround (with example code) that involves routing the egress traffic of a Cloud Run application through a GCE instance with a static IP address.

# Architecture

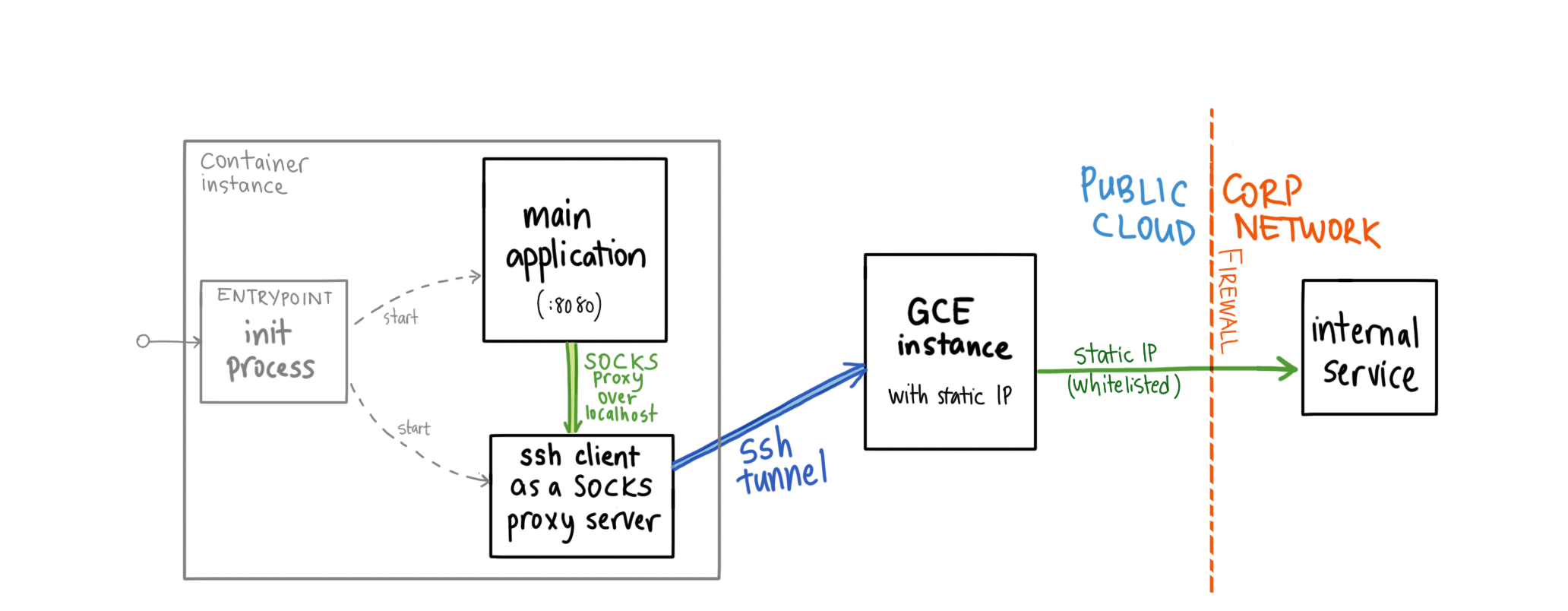

In a nutshell, the solution to this involves the following architecture:

- One (or more) Google Compute Engine instance (Linux VM) that doesn’t have anything special installed, but has a static external IP. You can use a single `f1-micro` instance for this for free (as it’s within the Free Tier).
  - Alternatively, you can use a 3rd party proxy with static IP such as QuotaGuard.

- Running multiple server processes in a single container:
  - the `ssh` client, connected to the GCE instance over its public IP using an SSH key. With the `-D` option, it is used as a SOCKS5 (TCP) proxy server.
  - your application, which now uses the SOCKS5 proxy exposed by the `ssh` client.

# Solution

You should follow the step-by-step instructions on the GitHub repository and inspect the source code to fully understand the solution.

First, we create a GCE VM running Linux. No special setup is necessary, since default GCE VMs already run an sshd server.

Then, inside the container, we change our `ENTRYPOINT` to start two processes and monitor them (in this case the main app and `ssh` acting as a local proxy server). This is no easy feat, and you should be aware of the caveats of running multiple processes in your container.

We use the OpenSSH client (`ssh` command) to start a TCP SOCKS5 proxy server locally (via the `-D localhost:5000` option) in a long-running mode (`-N` option) to tunnel the traffic via the GCE VM:

    ssh -i ./ssh_key "tunnel@${GCE_IP}" \
        -N -D localhost:5000 \
        -o StrictHostKeyChecking=no

By using the `ssh` command with an SSH private key that we baked into the container image (which is not very secure), we can SSH into the GCE instance without installing `gcloud` in the container image.
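As a rough illustration of the two-process setup, here is a minimal Python sketch of a supervisor that starts the tunnel and the app, then exits as soon as either one stops. This is not the code from the sample repository: `tunnel_command`, `supervise`, `main`, and the `./server` app command are hypothetical names, and only the `ssh` flags are taken from the command above.

```python
import os
import subprocess
import sys
import time


def tunnel_command(gce_ip, key_path="./ssh_key", port=5000):
    """Build the ssh argv for the SOCKS5 tunnel (flags copied from the article)."""
    return [
        "ssh", "-i", key_path, f"tunnel@{gce_ip}",
        "-N", "-D", f"localhost:{port}",
        "-o", "StrictHostKeyChecking=no",
    ]


def supervise(commands, poll_interval=0.5):
    """Start every command and return the exit code of the first one to exit.

    The remaining processes are terminated before returning, so the
    container stops as a whole instead of running half-broken.
    """
    procs = [subprocess.Popen(cmd) for cmd in commands]
    try:
        while True:
            for proc in procs:
                code = proc.poll()
                if code is not None:
                    return code
            time.sleep(poll_interval)
    finally:
        for proc in procs:
            if proc.poll() is None:
                proc.terminate()
        for proc in procs:
            proc.wait()


def main():
    # Assumption: GCE_IP is set on the Cloud Run service, and "./server"
    # stands in for whatever command starts your application.
    gce_ip = os.environ["GCE_IP"]
    sys.exit(supervise([tunnel_command(gce_ip), ["./server"]]))
```

Calling `main()` from the container's entrypoint would make the whole container exit when either process dies, which lets Cloud Run replace the instance. The sketch does not forward signals to the children, which a production supervisor would likely need.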

In the sample application, we set the `HTTPS_PROXY` environment variable so that our HTTP and HTTPS traffic is automatically routed through this proxy:

    HTTPS_PROXY=socks5://localhost:5000

The `HTTPS_PROXY` environment variable (as well as `HTTP_PROXY`, or lowercase spellings of these) is well-recognized by many programming languages:

- Go (natively in `net/http.Client`)
- Python (via `requests[socks]` package)
- Node.js (via `requests` module)
- Ruby, Python, …

Not all languages support these environment variables out of the box, so you might need to change your code to use the SOCKS5 proxy.
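If your HTTP client does not read these variables on its own, one option is to parse the proxy address yourself and hand it to the client. The following is a minimal Python sketch using only the standard library; `socks_proxy_from_env` is a hypothetical helper, and the fallback port 1080 (the conventional SOCKS port) is an assumption for URLs that omit a port.

```python
import urllib.request
from urllib.parse import urlsplit


def socks_proxy_from_env(scheme="https"):
    """Return (host, port) of the SOCKS5 proxy configured for `scheme`, or None.

    urllib.request.getproxies() reads HTTPS_PROXY / HTTP_PROXY (and their
    lowercase spellings) from the environment.
    """
    proxy_url = urllib.request.getproxies().get(scheme)
    if not proxy_url:
        return None
    parts = urlsplit(proxy_url)
    if parts.scheme not in ("socks5", "socks5h"):
        return None  # an HTTP proxy or something else, not a SOCKS5 tunnel
    # Assumption: fall back to the conventional SOCKS port if none is given.
    return parts.hostname, parts.port or 1080
```

With `HTTPS_PROXY=socks5://localhost:5000` set as above, this returns the host `localhost` and port `5000`, which can then be passed to whatever SOCKS-capable client library your application uses.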

It’s also worth noting that we have some code in the sample app that waits for the local proxy to start (by retrying to open a socket to `localhost:5000`).
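The waiting logic could look roughly like the Python sketch below. It is an illustration rather than the sample app's actual code; `wait_for_proxy` and its retry parameters are hypothetical.

```python
import socket
import time


def wait_for_proxy(host="localhost", port=5000, attempts=30, delay=0.5):
    """Retry a TCP connection until the local SOCKS5 proxy accepts it.

    Returns True once a connection succeeds, or False if every attempt fails.
    """
    for _ in range(attempts):
        try:
            # A successful connect means ssh is listening on the -D port.
            with socket.create_connection((host, port), timeout=1):
                return True
        except OSError:
            time.sleep(delay)
    return False
```

The app would call `wait_for_proxy()` before making its first outbound request, and fail fast if it returns `False` so that a broken tunnel is visible in the logs instead of surfacing later as request errors.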

# Caveats

You should know that this is a stop-gap solution until Cloud Run supports Serverless VPC Access or Cloud NAT.

There are many scalability and security concerns around routing all your traffic through a single-instance `f1-micro` VM. You can mitigate some of them:

- You can run multiple GCE VM instances and retry a different tunnel if one starts failing.
- It’s unlikely that you will saturate the network bandwidth of the GCE instance before you saturate the CPU of your Cloud Run instance, but you can always provision a machine type larger than the smallest `f1-micro` that I used.
- Connecting to the VM with an SSH private key baked into the container image is not great from a security perspective. You can use a secrets manager or download the SSH key on startup from a GCS bucket.
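For the multi-VM mitigation, one simple approach is to probe each VM before opening the tunnel and use the first one that responds. The Python sketch below assumes you keep your own list of VM addresses; `pick_tunnel_host` and the candidate list are hypothetical and not part of the sample repository.

```python
import socket


def pick_tunnel_host(candidates, timeout=2.0):
    """Return the first (host, port) pair that accepts a TCP connection, or None.

    `candidates` is a list of (host, port) tuples, for example the static
    IPs of your GCE VMs paired with the SSH port 22.
    """
    for host, port in candidates:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                return host, port
        except OSError:
            continue  # this VM looks unreachable, try the next one
    return None
```

The selected host can then be used as the `GCE_IP` for the `ssh` command. This only checks that the SSH port is reachable when the tunnel starts; handling a VM that fails later would still require restarting the tunnel against another candidate.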

Hopefully this article helps you try out Cloud Run while porting your existing applications. Let me know on Twitter if this has helped you.