Today I’m open sourcing runsd, an unofficial DNS service discovery and authentication layer I’ve built for Cloud Run to make microservices networking easier.

If you’ve used Kubernetes or Docker Swarm to run microservices, then you are probably familiar with the concept of DNS service discovery. It lets you call another service as easily as connecting to http://hello. Your request will be routed to a container running the service named hello, even if it’s on another machine in your cluster.[1]

However, Cloud Run currently does not offer DNS service discovery, and authenticating to private Cloud Run services requires code changes, which makes your services less portable (more on this soon).

# Table of Contents

- What does runsd solve?
- What does runsd do?
- Getting started with runsd
- How does runsd work?
- Conclusion

# What does runsd solve?

Two prominent issues led me to develop runsd:

- Cloud Run currently does not offer DNS service discovery out of the box. As a result, service URLs on Cloud Run are not fully predictable; they look like this:[2]

  {name}-{project_hash}-{region}.a.run.app

  It’s not ideal to hardcode this URL, and doing so makes it even harder to migrate from platforms like VMs, Kubernetes or Docker Swarm, where you have DNS-based service discovery.

-

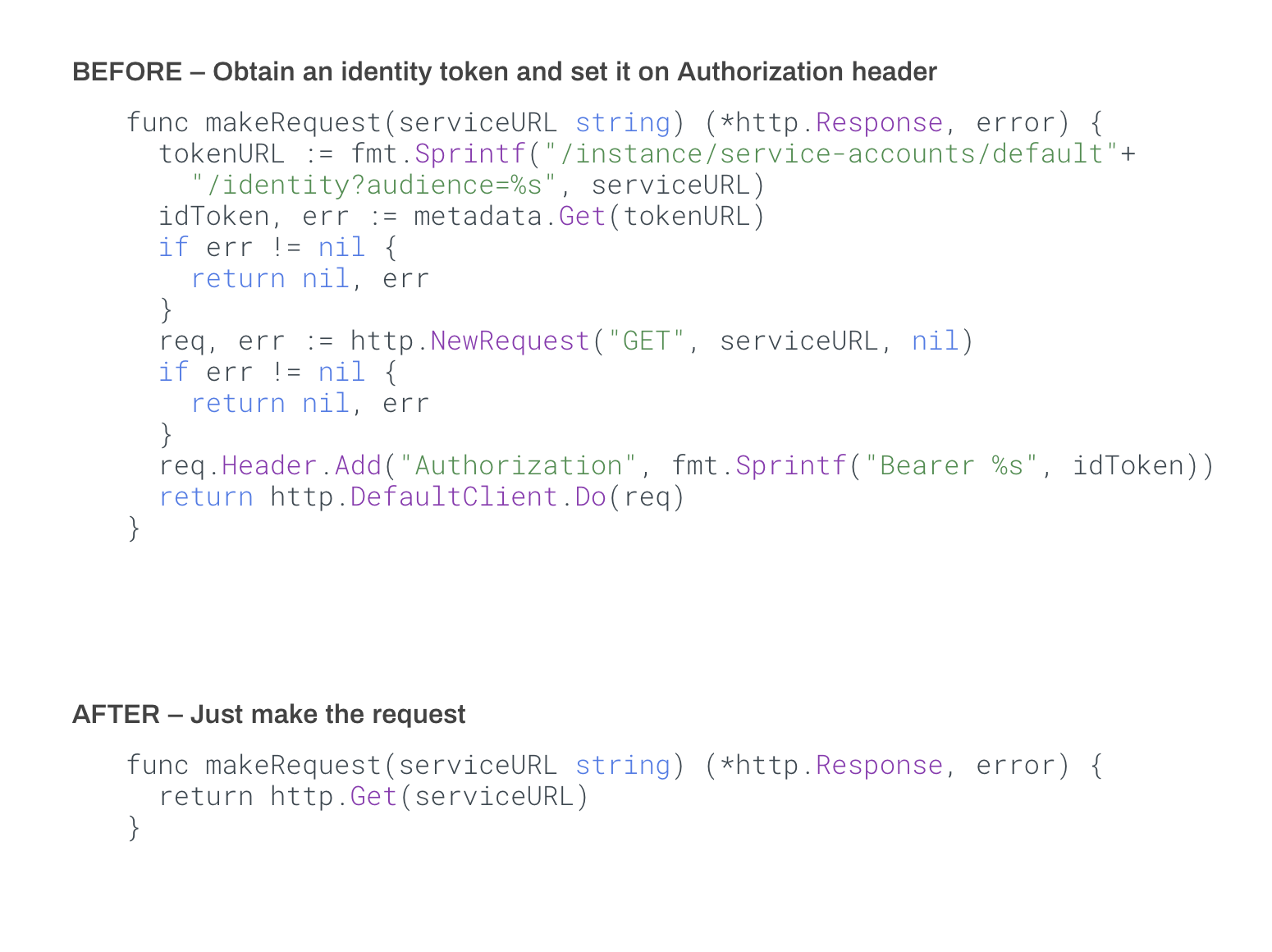

Even if you know the full URL of a service, private Cloud Run services require authentication. To authenticate, you have to modify your code to authenticate by obtaining an identity token (in form of a JWT) and adding it to every request as an HTTP header.3

Having to authenticate means that migrating from Kubernetes/Swarm to Cloud Run will require code change, and you can’t easily run off-the-shelf software on Cloud Run (since you can’t refactor the code).

# What does runsd do?

It precisely solves the two problems listed above:

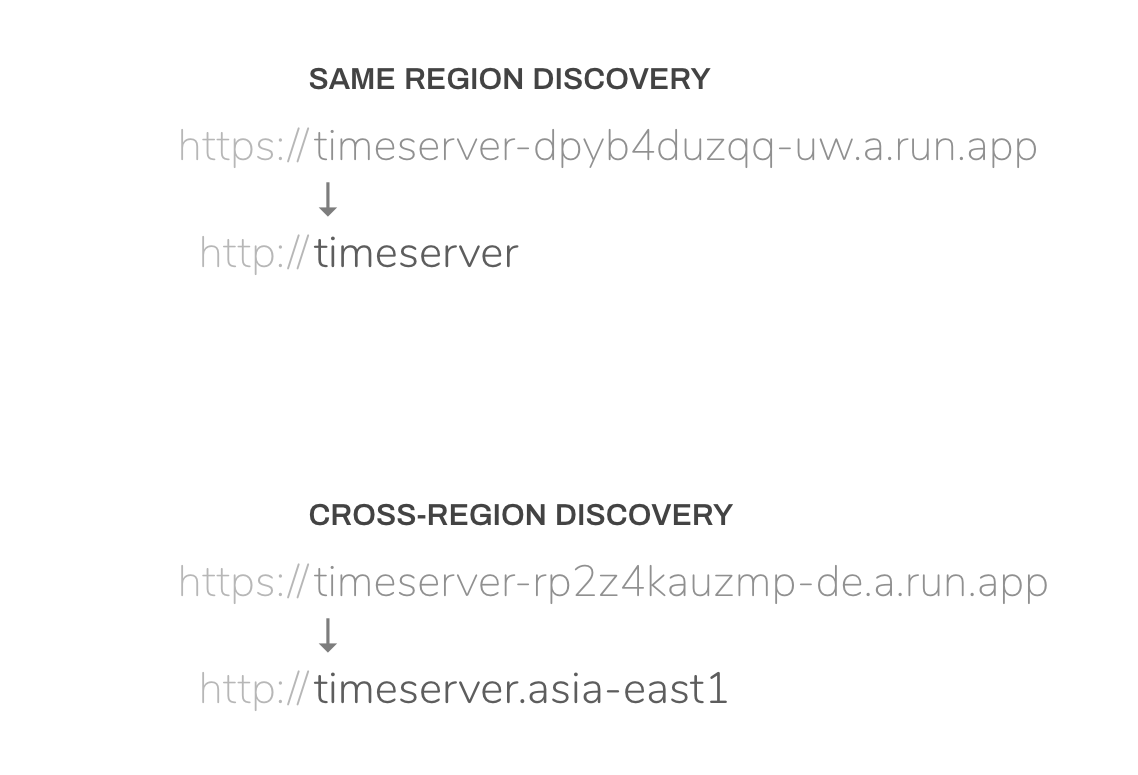

- runsd solves DNS service discovery for Cloud Run by giving you friendly hostnames (such as http://hello) to call other Cloud Run services in the same project, similar to Kubernetes.

- runsd also automatically authenticates every request that goes to a Cloud Run service, so your code stays free of authentication logic.

# Getting started with runsd

runsd works as a “drop-in binary” for your container image. You need to update your container’s entrypoint to start the runsd binary, followed by -- and your original command.

For example:

```dockerfile
ENTRYPOINT ["/runsd", "--", "python", "server.py"]
```
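With this ENTRYPOINT, runsd receives everything after -- as the command to start. The sketch below shows that general wrapper pattern in Go: split the arguments at --, run the child with inherited stdio, and exit with the child's status. It illustrates the pattern under assumptions and is not runsd's actual code; splitCommand is a hypothetical helper, and the real binary also sets up DNS and the proxy before starting your application.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"os/exec"
)

// splitCommand separates the wrapper's own flags from the child command,
// which is everything after the first "--" argument.
func splitCommand(args []string) (flags, cmd []string, err error) {
	for i, a := range args {
		if a == "--" {
			if i == len(args)-1 {
				return nil, nil, errors.New("no command given after --")
			}
			return args[:i], args[i+1:], nil
		}
	}
	return nil, nil, errors.New(`missing "--" separator before the command`)
}

func main() {
	_, cmdArgs, err := splitCommand(os.Args[1:])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	// A real wrapper would rewrite DNS settings and start its local servers
	// here, before the application process starts.
	cmd := exec.Command(cmdArgs[0], cmdArgs[1:]...)
	cmd.Stdin, cmd.Stdout, cmd.Stderr = os.Stdin, os.Stdout, os.Stderr
	if err := cmd.Run(); err != nil {
		var exitErr *exec.ExitError
		if errors.As(err, &exitErr) {
			os.Exit(exitErr.ExitCode()) // propagate the child's exit code
		}
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

A production wrapper would also forward signals such as SIGTERM to the child so it can shut down gracefully; that is omitted here for brevity.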

Please check out the runsd GitHub repository, try out the project and give feedback.

Now, let’s talk about how I built this tool by combining some tricks from DNS hijacking and in-container HTTP proxying.

# How does runsd work?

runsd is an entrypoint process for your container that runs entirely in userspace, and it does not need any secrets or API access to work.

To make its magic happen, runsd employs several hacks: it modifies how DNS resolution works inside the container, and it intercepts and modifies outgoing traffic.

The architecture diagram below explains how runsd resolves and proxies a request:

Because runsd is the entrypoint, it runs before anything else in the container. runsd takes this opportunity to modify the container’s local DNS resolution by hijacking the /etc/resolv.conf file and setting the nameserver to 127.0.0.1 (localhost). It also adds search domains like {region}.run.internal. and run.internal., so that when you look up the hostname “hello”, the resolver appends one of these suffixes and performs the lookup.

While hacking /etc/resolv.conf, runsd also configures the ndots option so that, for a container running in us-central1, a lookup of the name hello uses the us-central1.run.internal. suffix, whereas a lookup of hello.eu-west2 uses only the run.internal. suffix. This way, it can route to Cloud Run services both within the same region and in other regions.

Next, to serve the DNS queries originating from inside the container, runsd starts a small DNS server. This server answers queries for the run.internal. DNS zone itself and forwards all other queries to the nameserver that the Cloud Run container originally used (the one listed in /etc/resolv.conf before hijacking).

The local DNS server runs inside the runsd process. It is implemented using the Go dns package written by Miek Gieben, a Xoogler. (This package is also used in popular projects like CoreDNS.)
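For every query, the server’s first decision is whether the name belongs to run.internal. (answer it locally) or not (forward it to the original nameserver). This stdlib-only sketch shows just that decision with hypothetical function names; it leaves out DNS message parsing and the wire protocol, which runsd delegates to the miekg/dns package.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

const internalZone = "run.internal."

// handledLocally reports whether a query name falls inside run.internal.
// and should be answered locally instead of being forwarded.
func handledLocally(qname string) bool {
	name := strings.ToLower(qname)
	if !strings.HasSuffix(name, ".") {
		name += "." // names on the wire are fully qualified; normalize anyway
	}
	// Match on a label boundary so "notrun.internal." is not captured.
	return name == internalZone || strings.HasSuffix(name, "."+internalZone)
}

// upstreamAddr turns a nameserver IP from the original resolv.conf into a
// host:port address to forward queries to (brackets IPv6 as needed).
func upstreamAddr(nameserver string) string {
	return net.JoinHostPort(nameserver, "53")
}

func main() {
	for _, q := range []string{"hello.us-central1.run.internal.", "www.google.com.", "notrun.internal."} {
		if handledLocally(q) {
			fmt.Println(q, "-> answer locally")
		} else {
			fmt.Println(q, "-> forward to", upstreamAddr("10.0.0.2"))
		}
	}
}
```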

Now that we can resolve the name hello as hello.us-central1.run.internal., we need to intercept requests to this domain. To do that, we resolve all DNS queries for the run.internal. zone to the IP addresses 127.0.0.1 and ::1 (both meaning “localhost”).

After your application gets a response from the local DNS server, a request to http://hello results in the client initiating a connection to localhost:80. To intercept this request, we run a local HTTP reverse proxy server (on port 80) inside the runsd process, which runs in your container.

Once it intercepts the incoming HTTP request, runsd automatically adds the Authorization header (containing a JWT signed for the target service) and upgrades the connection to HTTPS. Your requests therefore remain secure: the unencrypted HTTP call never leaves the container before it is encrypted.

Finally, this proxy sends the augmented request to the real Cloud Run service (running at *.a.run.app) and proxies the received response back to your application. This is a somewhat non-trivial reverse proxy implementation in Go that supports proxying gRPC and WebSocket requests.

This tool might seem hacky; however, it relies on the same underlying principles as DNS service discovery on Kubernetes, and it aims to give you the same experience as Kubernetes service discovery.

Thankfully, you don’t have to worry about the details of how runsd works. You can just drop runsd into your container image and adjust your entrypoint; it should do its magic while fading into the background. 😇

# Conclusion

Using runsd, you can easily migrate your microservices from Kubernetes or Docker Swarm to Cloud Run and get the experience that you’re used to in terms of service-to-service networking.

This is an area we’re actively investing in at Google, so hopefully this experience will someday be solved without

I hope this article explains why and how I built runsd. Please make sure to check out the GitHub repository, star or fork it, and give feedback through the issue tracker or on Twitter.

I would like to thank the Cloud Run product management team for supporting the development and release of this unofficial tool.

1. On top of DNS service discovery, if you are using something like Istio, it might even perform mutual TLS authentication transparently for you.
2. For example, in the URL foo-2wvlk7vg3a-wl.a.run.app, the hash 2wvlk7vg3a is a random string chosen once for your GCP project that you can’t know ahead of time, and -wl indicates your region code, but these region codes are not published on any page or through an API.
3. Again, you can solve this by refactoring your code to fetch an identity token (JWT) and add an Authorization header to every outgoing request, but this is a hassle if you’re coming from Kubernetes or a similar platform where you don’t have to do this.